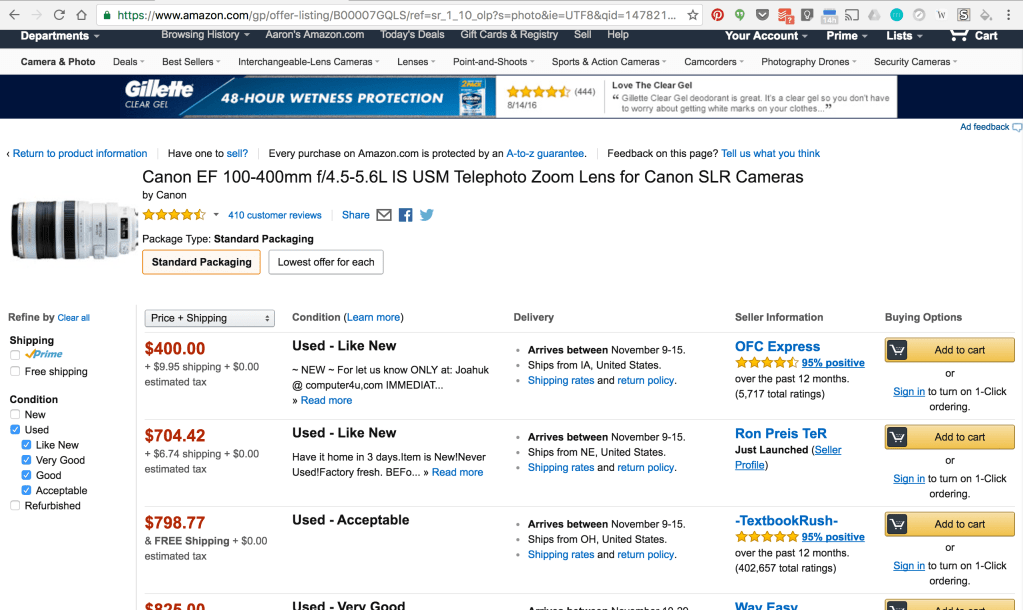

In the spring of 2017, I began doing research at the Security Economics Laboratory at the University of Tulsa, where I focused on a project called Scamazon. Scamazon aimed to quantify fraud on the Amazon.com marketplace, where users can run their own "storefronts" to sell items to other customers (a bit like eBay). Some sellers would try to hawk fake items or steal credit card information from unwary buyers, and we wanted to see just how prevalent this kind of fraud was.

I learned the basics of Selenium, a browser-automation library with Python bindings, and built my own rudimentary crawler that traversed Amazon, pulled data from various searches, and output statistics on how many fishy results it found. During the first month or two of development, a fairly high percentage of listings seemed malicious, but those numbers dropped off quickly, presumably as Amazon improved its own algorithms to detect and deter scams.

Still, this was a great exercise in two things: learning how to crawl the web and scrape its data, and more general research methods in computer science. Fraud is also ever-evolving, so maybe someday I'll boot up the scraper again or improve the project to see whether there are any new kinds of scams to detect! For now, you can check out the GitHub repo here.